Why the robots are (not) coming (quite yet), all you need to know about the global state of AI, what happens when AI never forgets, and how to use AI to run background checks.

Why the robots are (not) coming for us (yet)

As a child, one of my favourite pastimes was reading comics. Future-focused, even then, I loved a particular storyline in which slick androids competed in the Olympics and marathons were won in minutes rather than hours. So it was with much anticipation that I read last week’s coverage of the 20-odd robots that lined up to compete in the Beijing half marathon.

I’ve been bigging up the advances in robotics for a while now, and ‘jaw-dropping’ clips of machines putting away the groceries have tempted many a foolish investor to throw common sense into the recycling bin and splurge on anything metallic. The joy of a live test, though, is that there’s no flattering camera angle or clever editing to disguise the abject failure of your million-dollar bot to jog slowly in a straight line. Every machine tottered along like an ageing former president, surrounded by anxious bodyguards desperate to keep their precious charge upright. The final tally of finishers was 12,000 humans to 6 robots, with the best robot completing the 13-mile course in a shabby 2 hours 40 minutes.

Yet a more nuanced view is that none of these robots could have run, let alone finished, the race a mere 18 months ago. Moreover, given the pace of development and the scale of investment, I can guarantee that next year’s race will be a very different story. For now, as with AI models, the robot hype cycle is still churning. But while the bots still can’t finish a fun run without face-planting, it’s worth keeping your running shoes handy, because it’s only a matter of time before the robots are the ones laughing.

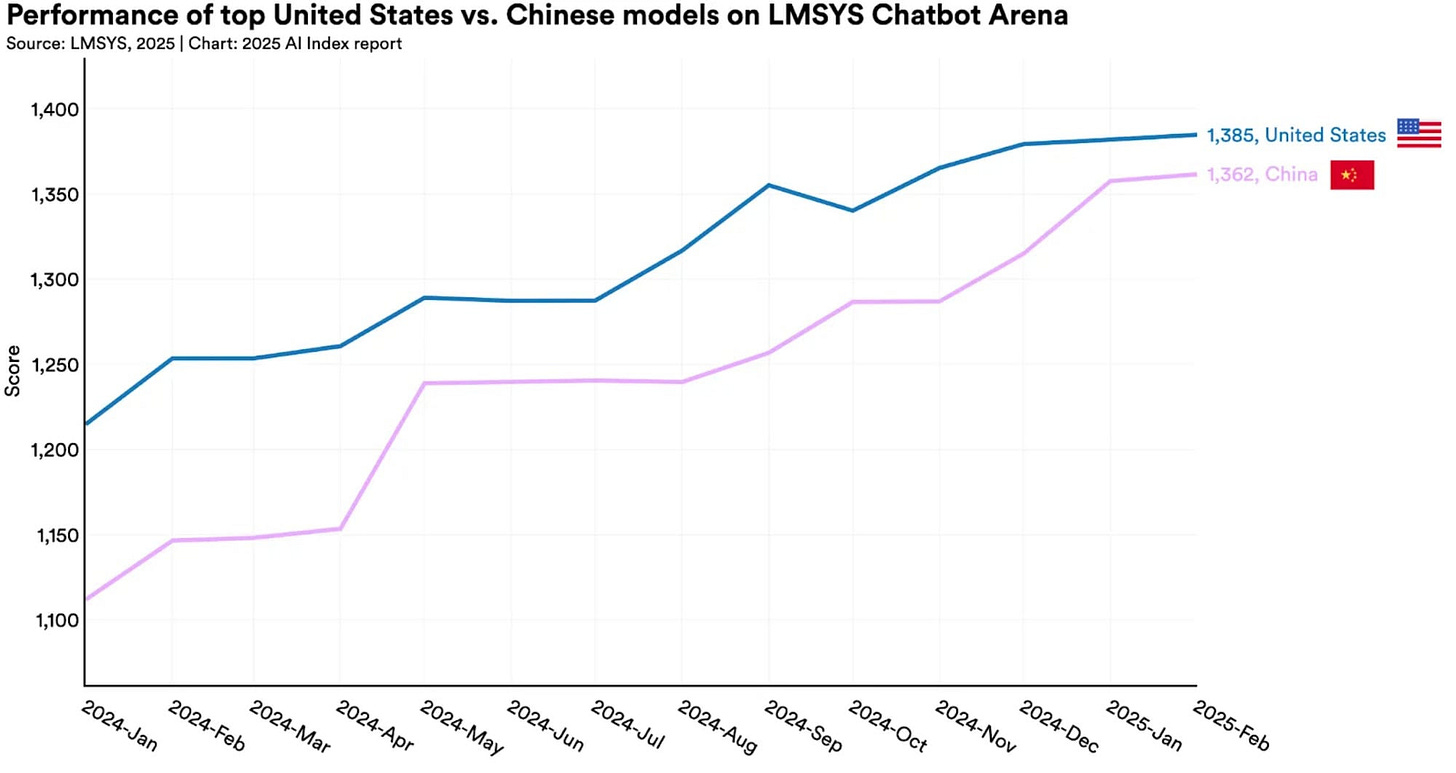

The 2025 AI Index Report: A US-China Tech War

Good, rigorous data on AI remains scarce. Marketing masquerading as research pollutes the well, and the “Deep Research” and “Deep Search” features built into major LLMs continue to blur the line between fact and fiction. That makes Stanford University’s annual AI Index Report a sane, and increasingly lone, voice of reason on where AI is really headed.

Key takeaways:

China has all but caught up with U.S. AI capabilities and is on track to reach parity, or even overtake the U.S., driven by national pride and a sweeping government AI plan. JD Vance’s dismissive “Chinese peasants” jibe has only served to fuel the fire of Chinese AI nationalism. The Chinese government’s recent plan for AI dominance is breathtaking in scope: like a Brit on holiday on the Costa del Sol, it’s “(AI) chips with everything”.

…however, the U.S. is spending on AI like there is no tomorrow. U.S. private AI investment hit $109 billion in 2024, nearly 12 times China’s $9.3 billion and 24 times the UK’s $4.5 billion. The gap is even wider in generative AI, where U.S. investment exceeded the combined European Union and UK total by $25.5 billion, up from a $21.1 billion gap in 2023.
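The multiples in that paragraph are easy to sanity-check. Here is a minimal Python sketch whose only inputs are the three 2024 investment figures quoted from the report; the dictionary and function names are purely illustrative:

```python
# Private AI investment in 2024, in billions of US dollars,
# as quoted from the 2025 AI Index Report.
investment = {"US": 109.0, "China": 9.3, "UK": 4.5}


def multiple_of(country: str, other: str) -> float:
    """How many times larger one country's investment is than another's."""
    return investment[country] / investment[other]


for other in ("China", "UK"):
    # Print each multiple rounded to one decimal place.
    print(f"US vs {other}: {multiple_of('US', other):.1f}x")
```

Both results (about 11.7x and 24.2x) match the report’s “nearly 12 times” and “24 times”.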

Getting better, faster. On every benchmark, AI performance is improving at roughly 50% every quarter, and AI is showing the potential to outpace humans in video generation and programming.

AI costs falling through the floor. The cost of inference (the compute needed to run a trained model, for example to create that strikingly original image of you as an action figure) has fallen more than 280-fold since 2022, while hardware costs have decreased by 30% annually and energy efficiency has improved by 40% each year.
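Annual rates compound, which is why these trends add up so quickly. Here is a minimal Python sketch of what the quoted hardware and energy figures would imply if they held steady for three years. The constant rates and the three-year horizon are assumptions for illustration, not forecasts from the report:

```python
def compound(rate_per_year: float, years: int) -> float:
    """Multiplier after `years` of a constant annual change (e.g. -0.30 for a 30% fall)."""
    return (1 + rate_per_year) ** years


years = 3  # hypothetical horizon, chosen only for illustration

# Assumes the report's annual rates stay constant over the whole horizon.
hardware_cost = compound(-0.30, years)  # cost multiplier after 30%/year declines
efficiency = compound(0.40, years)      # efficiency multiplier after 40%/year gains

print(f"Hardware cost after {years} years: {hardware_cost:.2f}x today's")
print(f"Energy efficiency after {years} years: {efficiency:.2f}x today's")
```

At those rates, hardware costs would fall to roughly a third of today’s level in three years, while energy efficiency would almost triple.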

AI will soon be everywhere. AI is fast becoming part of daily life, from healthcare to transportation. In 2023 alone, the US FDA approved 223 AI-enabled medical devices, while Baidu and Waymo have launched driverless ride-hailing services in several cities.

This is a hefty report that can be summed up in one (albeit long) sentence: AI is getting better, faster and cheaper and spreading everywhere, with much of the fresh work coming from China, whose researchers now rival the Americans, though the US still outspends everyone else by a mile.

Experiments in AI - just how good is OpenAI’s latest model?

What seems like decades ago in AI terms (i.e. about 18 months), I was pitching an LLM-based tool to a client that wanted to expand its due diligence business in Africa. In plain English, due diligence means investigating a company’s or person’s background to ensure that they are kosher and unlikely to screw you over in a current or future transaction. My pitch was that they should let an AI loose on their proprietary data and automate the grunt work of gathering and analysing publicly available information, known as open-source intelligence (OSINT).

This would free up their analysts to do the really valuable human intelligence stuff. At the time, AI efforts were pretty poor, so I wanted to see how much better the new reasoning (or ‘thinking’) models are at running OSINT queries. What a difference ‘deep research’ makes! See the video below. Spoiler alert: it’s very good.

What happens when AI never forgets?

Sam Altman (CEO of OpenAI) revealed this week that ChatGPT will, by default, remember everything you’ve ever asked it. From your faintly embarrassing “remind me how to do multiplication” query to your most personal questions, every prompt is now fodder for its memory, and soon every mainstream model is likely to do the same.

The swagger with which this arrives is staggering. Barely a year ago, Microsoft had to yank its ill-named Recall feature, which quietly snapped your screen every 20 seconds so it could “help” you later. Yet an idea that its Microsoft inventor must have feared was a career-ending gaffe has now been dusted off and repackaged as “a truly adaptive assistant—one that grows with you, tailors to your workflow, and reduces friction in repeat interactions”.

There are so many obvious pitfalls with leaving this setting on in ChatGPT and other LLMs:

Stray confessions never die – the model can resurrect details you wish you’d never shared.

“Private” was never private – we’ve known this all along, but OpenAI has just said the quiet part out loud: your chats were always training data.

Inference is racing ahead – reasoning models, which take time to think and research before answering, are now so good at joining the dots that the latest LLMs can pinpoint where a photo was taken even when it contains no obvious geographic clues. Just imagine what they could do with your full chat history!

You are the product – free-tier users gushed about OpenAI’s generosity, but those chats have allowed it to build million-line dossiers of hopes, fears and insecurities faster than Google ever managed. No wonder Altman mused about how easy it would be to create a social network based on ChatGPT’s billion weekly users.

The near-total scrapping of guardrails in the latest LLMs shoves privacy, clarity and user choice to the back of the queue. Tech firms may win the profit race by letting their models remember everything, but an AI that never forgets still needs owners who do.

What we’re reading this week

Anthropic warns fully AI employees are a year away.

A new tool can show you how much electricity your chatbot messages consume.

Instagram is using AI to find teens lying about their age and restricting their accounts.

Meta rolls out live translations to all Ray-Ban smart glasses users.

The United Arab Emirates is preparing to become the first country in the world to use AI to help write its national legislation.

Fintech founder charged with fraud after ‘AI’ shopping app found to be powered by humans in the Philippines.

That's all for now. Subscribe for the latest innovations and developments in AI.

So you don't miss a thing, follow our Instagram and X pages for more creative content and insights into our work and what we do.